How to create a static web server with Azure Storage and Cloudflare?

At Juntoz.com we run many websites, and as a result we have many files that need to be shared across all of them: images, CSS files, JS files, etc.

Shipping many of these as npm packages would be overengineering. Even where a package might be more suitable, there is the “problem” of having to upgrade it in many applications whenever a new version comes out (yet you can still create a new version of each file if you want to).

To solve this problem, we will create a “poor man’s” web server using Azure Storage and Cloudflare.

The main benefit is the very low cost of this solution. If I ran a real web server, I would pay for every second it is up. Here, Azure Storage charges me by the bytes stored and transmitted, and with Cloudflare caching enabled, the bill gets even smaller.
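To make the cost reasoning concrete, here is a minimal sketch of the arithmetic. It is a simplification that only models bytes stored and bytes transmitted, as described above; the function name, its parameters, and any prices you pass in are hypothetical placeholders, not real Azure rates.

```javascript
// Rough monthly cost model: storage plus the egress that actually reaches Azure.
// All prices are caller-supplied placeholders, not real Azure rates.
function estimateMonthlyCost({
  storedGb,
  requestedGb,
  pricePerStoredGb,
  pricePerEgressGb,
  cacheHitRatio = 0, // share of traffic answered from the Cloudflare cache (0 to 1)
}) {
  if (cacheHitRatio < 0 || cacheHitRatio > 1) {
    throw new RangeError("cacheHitRatio must be between 0 and 1");
  }
  const storageCost = storedGb * pricePerStoredGb;
  // Only cache misses are fetched from the storage account.
  const originEgressGb = requestedGb * (1 - cacheHitRatio);
  const egressCost = originEgressGb * pricePerEgressGb;
  return { storageCost, egressCost, total: storageCost + egressCost };
}
```

The higher the cache hit ratio, the less traffic reaches the storage account, which is why the caching matters for the bill.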

This article will describe the steps we need to perform to accomplish this from repo to production.

Prerequisites:

- A Cloudflare account with a domain

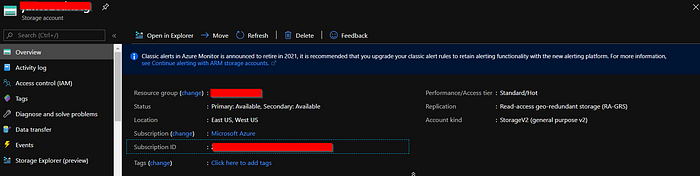

- A storage account in Azure

- A git repository (in our case, it is in Azure Repos)

Acknowledgements

This link was the key to solving this problem, so big thanks to Brett Mckenzie for his blog entry.

https://brettmckenzie.net/2020/03/23/azure-pipelines-copy-files-task-authentication-failed

Let’s begin

For this example, we will have a JavaScript component that needs to be published, so we have source files that pass through webpack to generate some dist files.

The repo would look like this:

    src\
    ---\index.js
    azure-pipelines.yml
    package.json

And this would be our azure-pipelines.yml:

    stages:
    - stage: Build
      jobs:
      - job: Build
        pool:
          vmImage: windows-2019
        steps:
        - script: |
            npm ci
            npm run build
          displayName: build
        - task: CopyFiles@2
          displayName: stage deploy files
          inputs:
            contents: $(build.sourcesDirectory)/dist/*.js
            flattenFolders: true
            targetFolder: $(build.artifactStagingDirectory)
        - task: PublishBuildArtifacts@1
          displayName: publish output folder as this build artifact
          inputs:
            pathtoPublish: $(build.artifactStagingDirectory)
            artifactName: drop
    - stage: Staging
      jobs:
      - deployment: Staging
        pool:
          vmImage: windows-2019
        environment: STG
        strategy:
          runOnce:
            deploy:
              steps:
              - task: AzureFileCopy@4
                inputs:
                  sourcePath: $(Pipeline.Workspace)\drop\*
                  azureSubscription: my-subscription-service-connection
                  destination: azureBlob
                  storage: mystrg
                  containerName: mycontainer
    - stage: Production
      jobs:
      - deployment: Production
        pool:
          vmImage: windows-2019
        environment: PROD
        strategy:
          runOnce:
            deploy:
              steps:
              - task: AzureFileCopy@4
                inputs:
                  sourcePath: $(Pipeline.Workspace)\drop\*
                  azureSubscription: my-subscription-service-connection
                  destination: azureBlob
                  storage: mystrg
                  containerName: mycontainer

Some notes:

- The stage Build will pick up the files and build them using

npm run buildwhich will generate adistfolder that contains all the js files needed (this is our example, probably it will be different for your case). - Then these files will be pushed as the pipeline’s artifacts.

- At the stage

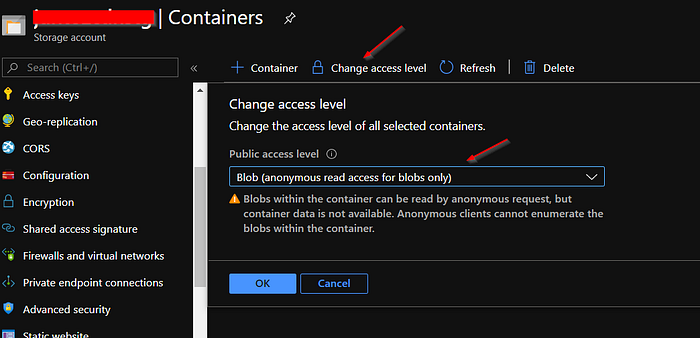

Staging, the pipeline artifacts will be picked up and then pushed to the staging storage account usingAzureFileCopy@4. - At this step, you set your Azure storage account

mystrgand the container namemycontainer(that you previously configured). - At the stage

Production, the same steps are executed but the target is the production storage account.

If you run the pipeline script as is, you will get the error below (I am including it for all the developers who got this error and banged their heads, like I did, trying to figure out what was going on):

    INFO: Authentication failed, it is either not correct, or expired, or does not have the correct permission -> github.com/Azure/azure-storage-blob-go/azblob.newStorageError, /home/vsts/go/pkg/mod/github.com/!azure/azure-storage-blob-go@v0.7.0/azblob/zc_storage_error.go:42
    ===== RESPONSE ERROR (ServiceCode=AuthorizationPermissionMismatch) =====
    Description=This request is not authorized to perform this operation using this permission.
    RequestId:92002da5-401e-0014-253a-39c1f3000000
    Time:2020-06-03T00:05:17.6213183Z, Details:
    Code: AuthorizationPermissionMismatch
    PUT https://mystrg.blob.core.windows.net/mycontainer/myfile.js?timeout=901
    Authorization: REDACTED
    Content-Length: [169383]

At first, I thought a Shared Access Signature on that storage account would solve it. However, that was not the solution (it seems the task does not use a SAS when it calls AzCopy).

I tried different approaches, and even tried calling the azcopy command directly in the pipeline, but it was not present on the agent. Installing it was just too much.

Luckily, I found this link, https://brettmckenzie.net/2020/03/23/azure-pipelines-copy-files-task-authentication-failed, which explained exactly the reason for this error.

Authorize

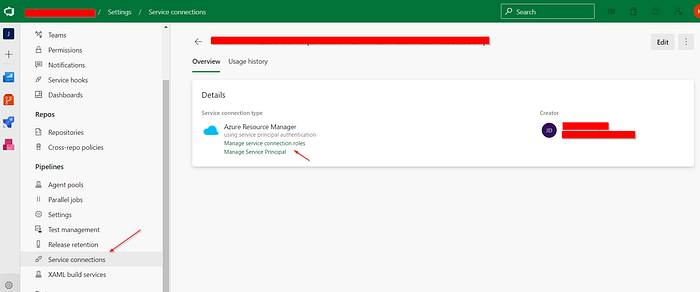

To solve it, go to your Azure DevOps project settings, open Service Connections, and open the one that you are using in your task (azureSubscription: my-subscription-service-connection).

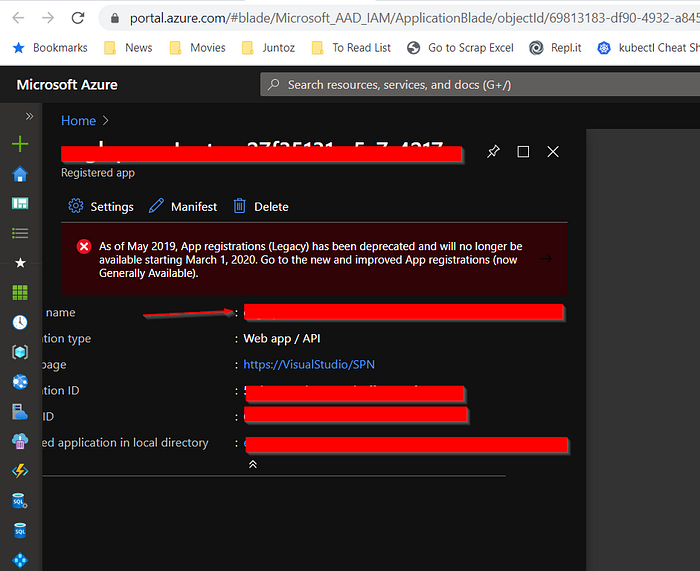

Click Manage Service Principal; this will open the Azure Portal. There, simply write down the internal service account name.

Return to your service connection and click Manage service roles.

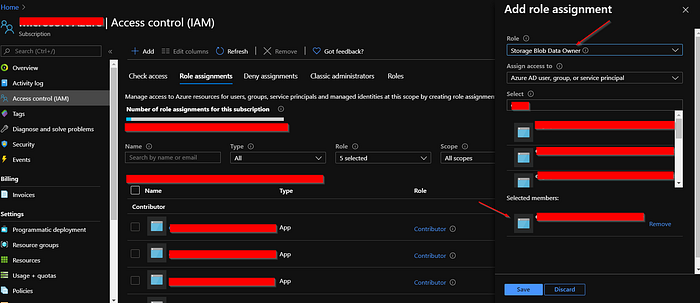

This will open your Azure subscription’s role management blade. There, add a new role assignment for that service account name with the two roles “Storage Blob Data Owner” and “Storage Blob Data Contributor”.

Once you save your changes, go back to your pipeline, and execute it again.

This time it should pass through without errors.

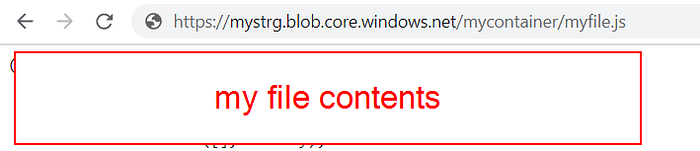

And your file is now published!

If you use the domain mystrg.blob.core.windows.net, you need to use https.
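For reference, the address of a published file follows the pattern seen in the error log above. A small sketch that builds it is shown below; the helper name `blobUrl` is hypothetical, and it assumes the container allows anonymous read access so that browsers can fetch the file.

```javascript
// Build the public HTTPS URL of a blob on the default storage endpoint.
function blobUrl(account, container, blobName) {
  // Encode each path segment but keep "/" so virtual folders still work.
  const encodedName = blobName.split("/").map(encodeURIComponent).join("/");
  return `https://${account}.blob.core.windows.net/${encodeURIComponent(container)}/${encodedName}`;
}

console.log(blobUrl("mystrg", "mycontainer", "myfile.js"));
// https://mystrg.blob.core.windows.net/mycontainer/myfile.js
```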

Using your own domain

If you want to use your own domain, a few more steps are needed.

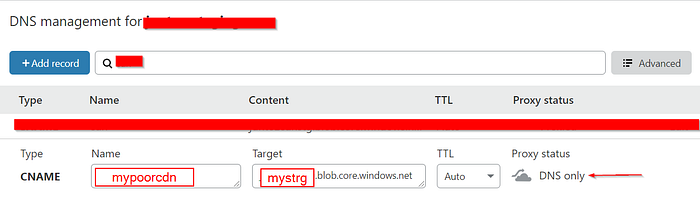

The first step is to go to your DNS settings and create the corresponding CNAME to map it to your blob storage. NOTE: in our case, we will map it to a subdomain.

In the case of Cloudflare, create a CNAME with the following info:

Name: mypoorcdn

Target: mystrg.blob.core.windows.net

TTL: Auto

Proxy Status: DNS Only

If you do not use Cloudflare, the Proxy Status field does not apply. If you do use it, you have to set it to DNS Only. This is very important.

Then go to your Azure storage account, open Custom Domain, and enter the domain you just created: mypoorcdn.mydomain.com.
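If you prefer to script the record instead of using the dashboard, the same fields can be sent to the Cloudflare API. The sketch below only builds the request; the function name, `zoneId`, and `apiToken` are hypothetical placeholders, and the field mapping (ttl 1 for Auto, proxied false for DNS Only) should be checked against the current Cloudflare API documentation.

```javascript
// Build (but do not send) a Cloudflare API request that creates the CNAME.
function buildCnameRequest({ zoneId, apiToken, name, target, proxied = false }) {
  return {
    url: `https://api.cloudflare.com/client/v4/zones/${zoneId}/dns_records`,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        type: "CNAME",
        name, // e.g. "mypoorcdn"
        content: target, // e.g. "mystrg.blob.core.windows.net"
        ttl: 1, // 1 stands for "Auto"
        proxied, // false = DNS Only, true = Proxied
      }),
    },
  };
}

const request = buildCnameRequest({
  zoneId: "YOUR_ZONE_ID", // placeholder
  apiToken: "YOUR_API_TOKEN", // placeholder
  name: "mypoorcdn",
  target: "mystrg.blob.core.windows.net",
});
console.log(request.options.body);
```

On Node 18 or later, the request could then be sent with `fetch(request.url, request.options)`.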

NOTE: I’ve seen reports at this step that if you don’t use DNS Only, you will not be able to assign the custom domain.

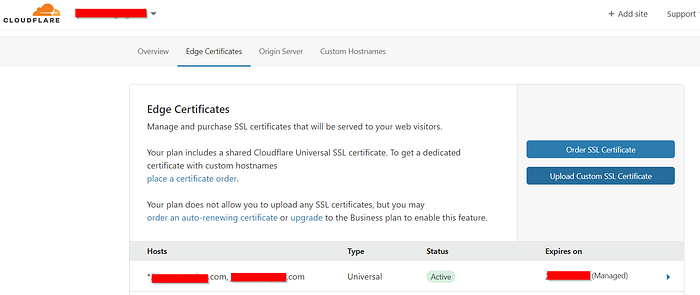

Custom domains in Azure Storage have one drawback: they can only serve HTTP. Based on the MS documentation, to enable HTTPS you would have to create an Azure CDN, with its inherent costs.

So if you request https://mypoorcdn.mydomain.com/mycontainer/myfile.js, you will see an “https not supported” error. Bummer.

Here comes Cloudflare to the rescue!

Cloudflare can provide a TLS certificate at the edge, and guess how that gets activated?

By changing your Proxy Status to Proxied. This sets a proxy on top of your subdomain and will allow https to flow without issues.

So now, if you call https://mypoorcdn.mydomain.com/mycontainer/myfile.js, you will see the padlock showing nicely in your browser.

Additionally, you might need to enable CORS on the storage account for the clients of your files.
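As a rough idea of what such a CORS rule contains, the sketch below builds one rule object. The helper `buildCorsRule` and the example origins are hypothetical, and the field names are meant to mirror the CorsRule shape of the @azure/storage-blob package, which is an assumption to verify against the SDK version you use.

```javascript
// Build one CORS rule for the Blob service.
// Field names follow (as an assumption) the CorsRule shape of @azure/storage-blob.
function buildCorsRule(allowedOrigins, maxAgeInSeconds = 3600) {
  if (!Array.isArray(allowedOrigins) || allowedOrigins.length === 0) {
    throw new TypeError("allowedOrigins must be a non-empty array");
  }
  return {
    allowedOrigins: allowedOrigins.join(","), // comma-separated list of origins
    allowedMethods: "GET,HEAD,OPTIONS", // read-only access is enough for static files
    allowedHeaders: "*",
    exposedHeaders: "*",
    maxAgeInSeconds, // how long browsers may cache the preflight response
  };
}

// The origins below are placeholders for the sites that load your files.
const rule = buildCorsRule(["https://www.mydomain.com", "https://shop.mydomain.com"]);
console.log(rule.allowedOrigins);
// https://www.mydomain.com,https://shop.mydomain.com
```

The same values can also be entered by hand in the storage account's CORS settings in the Azure Portal.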

Conclusion

A cheap HTTPS static web server is possible with Azure Storage and Cloudflare. The costs are very low, and with Cloudflare caching the responses, they are even lower.

Happy coding!